You probably already know that you can conduct meaningful usability tests with as few as five users. This widely-adopted tenet of UX research and design has endured two decades because it’s both surprising and heartening. After all, it shows that UX research doesn’t have to be expensive and time-consuming. To the contrary, it can be quick, accessible, nimble, and cost-effective. That’s good news. And in general, the guideline holds true.

However, it’s important to recognize that the “five users rule” is more rule of thumb than rule of law. There are some exceptions to this tried-and-true baseline. Depending on your product’s needs, it may be necessary to take a different approach to get the most useful results.

So, how many users do you really need to get the best test results? Read on to find out.

The Rule of Five: Why Five Users Are Usually Enough

To understand why five users is the standard for most usability tests, it helps to look at a few simple numbers. Testing with five users will help you uncover an average of 85 percent of a product’s usability issues. Testing with ten users, meanwhile, will help you find an average of 95 percent of issues. Twenty users will identify 98 percent of problems, and thirty users will find 99 percent. As you can see, the return on recruiting more users diminishes quickly: doubling the pool from five to ten adds only about ten percentage points.

Percentage of Total Known Usability Problems Found in 100 Analysis Samples

| Number of Users | Minimum % Found | Mean % Found |
|---|---|---|
| 5 | 55 | 85.55 |
| 10 | 82 | 94.686 |
| 15 | 90 | 97.050 |
| 20 | 95 | 98.4 |
| 30 | 97 | 99.0 |
| 40 | 98 | 99.6 |
| 50 | 98 | 100 |

Source: Faulkner, 2003
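The flattening curve in these numbers is often explained with a simple probability model: if each user has some fixed chance of running into any given problem, the share of problems seen at least once grows as 1 - (1 - p)^n. The Python sketch below illustrates that model. The function name is hypothetical, and the default detection rate of 0.31 is an assumption (an often-quoted average from Nielsen and Landauer's work), not a figure taken from Faulkner's data.

```python
def expected_share_found(n_users: int, p_detect: float = 0.31) -> float:
    """Expected share of usability problems seen at least once by n_users.

    Assumes every user independently hits any given problem with the
    same probability p_detect. Real products rarely behave this neatly.
    """
    if n_users < 0:
        raise ValueError("n_users must be zero or more")
    if not 0.0 <= p_detect <= 1.0:
        raise ValueError("p_detect must be between 0 and 1")
    # A problem is missed only if every single user misses it.
    return 1.0 - (1.0 - p_detect) ** n_users


if __name__ == "__main__":
    for n in (5, 10, 15, 20):
        print(f"{n:>2} users: {expected_share_found(n):.1%} of problems")
```

With the assumed rate, five users land at about 84 percent, in line with the roughly 85 percent average in the table. The model is a simplification, so it will not reproduce the empirical figures exactly, and it says nothing about the worst-case "Minimum % Found" column.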

If your EdTech company takes a lean and iterative approach to product development and UX, then it makes logical sense to start with five users. This enables you to quickly and efficiently test your product, identify the majority of issues, and then use that data to refine the design before testing again. It’s a testing methodology that goes well with agile product development.

For EdTech companies that are skeptical about the time and cost associated with user testing, the “five users” rule comes as a relief. It helps them see that UX research doesn’t necessarily mean a major commitment.

On the other hand, a pool of just five users can start to seem very small when the results start to roll in. That’s especially true if the results are close — 3 to 2 in favor or against — in a scenario where the team itself may be divided on the best way forward. In this case, the data may not feel as meaningful.

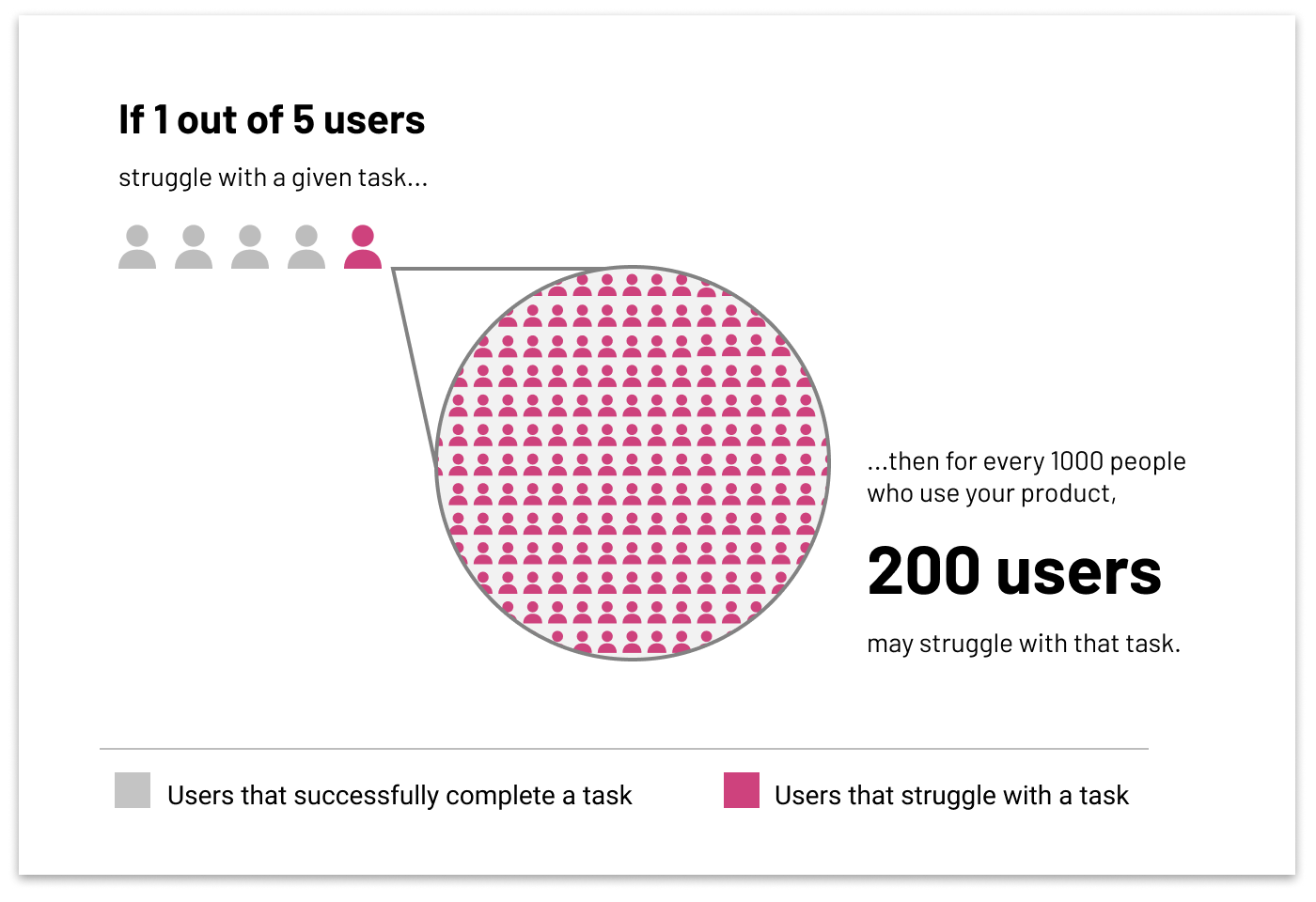

Even when the results are crystal clear, it’s still important to think through what they might mean at scale. For example, if just one user out of five fails to complete a significant task, you may be tempted to conclude that you don’t have a problem. Yet the test suggests that, at scale, something like 20 percent of your user base may struggle with a particular task or workflow. All of a sudden that sounds less like an edge case and more like a major problem.

Just one in five users struggling with a task might not sound like a big issue. At scale, however, 200 may be struggling for every 1,000 users of your product. That one user might just be an anomaly, but could just as easily represent a much bigger problem.
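To get a feel for how much uncertainty sits behind a one-in-five result, here is a minimal Python sketch of a Wilson score interval, one common way to put a confidence range on a proportion from a small sample. The function name and the 95 percent level (z = 1.96) are illustrative assumptions, not something the five-user guideline prescribes.

```python
import math


def wilson_interval(failures: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate confidence interval for a failure rate (Wilson score).

    failures: users who failed the task; n: users tested.
    z = 1.96 corresponds to roughly 95 percent confidence (an assumption).
    """
    if n <= 0 or not 0 <= failures <= n:
        raise ValueError("need n > 0 and 0 <= failures <= n")
    p_hat = failures / n
    denom = 1 + z * z / n
    center = (p_hat + z * z / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z * z / (4 * n * n)) / denom
    return max(0.0, center - half), min(1.0, center + half)


if __name__ == "__main__":
    low, high = wilson_interval(failures=1, n=5)
    print(f"Plausible failure rate at scale: {low:.0%} to {high:.0%}")
```

For one failure in five, the range runs from roughly 4 percent to roughly 62 percent. In other words, the same result is compatible with a minor edge case and with a problem that affects most of your users, which is a good argument for another round of testing before deciding.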

Indeed, a single round of testing with just five users might not be enough to guide your team in making all the right UX decisions for your EdTech product, but it will help prioritize immediate next steps based on user feedback and the amount of risk/reward your business is willing to take with the findings.

Finally, keep in mind that you should always plan to recruit at least five users from each of your key target audiences, assuming their needs and behaviors are markedly different. For example, let’s say your product is geared toward both students and instructors. If that’s the case, you’ll need to include at least five students and five instructors in each round of testing, unless you are testing a portion of the product that will only be used by one of the two groups. Note, however, that if there is some overlap in behavior between the groups, then you can usually get away with fewer users in each group. When planning your target audiences, don’t forget that new users and experienced users can have very different needs, too, because of how much they already know about your product. If you’re designing for both, you’ll likely need five experienced and five novice users as part of your study.

Choosing the Right Sample Size: When Five Users Aren’t Enough

Five users is the standard for most UX research. In most cases, it’s a safe bet. But there are at least a few instances in which five users just won’t cut it.

- Quantitative research. Qualitative research is the heart and soul of UX testing. It is concerned with discovering why users behave the way they do when they interact with a product. But there’s a time and a place for quantitative testing, too. For example, quantitative UX research, which often takes the form of surveys, is ideal for confirming the existence of a problem or verifying qualitative findings. If you need to employ quantitative testing, Nielsen recommends you plan to start with at least 20 users. You can increase your numbers from there based on the confidence intervals you need. Check out this great set of calculators by MeasuringU designed to help you determine the optimal number of participants for your testing.

- Preference testing. If you are trying to assess your users’ preference between two different designs, five users may not be enough. If three of your five users favor one design over the other, the results might not be predictive of your larger user base. It may still make sense to start with five users, but be prepared to go back and do another round or two of testing if your results aren’t crystal clear.

- Problems that only impact a very small percentage of users. If you find you need to address a problem that only affects a small minority of users, five test users likely won’t cut it. Of course, this situation requires you to focus on edge cases. While edge cases may be less frequent, it’s incumbent upon you to consider your business priorities and to calculate the risks and costs associated with moving forward. And remember: some special considerations, such as accessibility concerns, deserve attention even when they affect few users. In those cases, you may find it necessary to recruit more users, or to assemble a group of five users who are sure to be impacted by the issue.
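Two of the cases above come down to simple arithmetic, sketched here in Python. The first function asks how often a split at least as lopsided as the one you observed would show up by pure chance if users had no real preference. The second estimates how many users you would need before a rare problem is likely to appear at least once. Both function names, the 50/50 "no preference" assumption, and the 85 percent target are illustrative choices, not part of any cited method.

```python
import math


def chance_of_split(n_users: int, majority: int) -> float:
    """Probability that one design gets at least `majority` of n_users
    votes when users actually have no preference (a 50/50 coin flip)."""
    if not (n_users > 0 and n_users / 2 < majority <= n_users):
        raise ValueError("majority must be more than half of n_users")
    one_side = sum(math.comb(n_users, k) for k in range(majority, n_users + 1))
    # Either design could end up as the majority, hence the factor of 2.
    return 2 * one_side / 2 ** n_users


def users_needed(share_affected: float, target: float = 0.85) -> int:
    """Smallest number of randomly recruited users for which a problem
    affecting `share_affected` of users shows up at least once with
    probability `target` (assumes users are independent)."""
    if not (0.0 < share_affected < 1.0 and 0.0 < target < 1.0):
        raise ValueError("both arguments must be strictly between 0 and 1")
    return math.ceil(math.log(1 - target) / math.log(1 - share_affected))


if __name__ == "__main__":
    print(f"3-2 split by chance: {chance_of_split(5, 3):.0%}")
    print(f"5-0 split by chance: {chance_of_split(5, 5):.1%}")
    print(f"Users needed for a 5% problem: {users_needed(0.05)}")
```

Under these assumptions, a 3-to-2 split is what you would always expect from five coin flips, so it carries no signal on its own. A problem that affects 5 percent of users would take a few dozen randomly chosen participants to surface reliably, which is why recruiting five users who are known to be affected is usually the more practical route.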

Does Five Users Really Mean Five Users?

In most cases, it makes sense to start with five users per target audience when you conduct usability tests. Starting small enables you to move quickly and efficiently as you seek to uncover problems and rapidly iterate on your product.

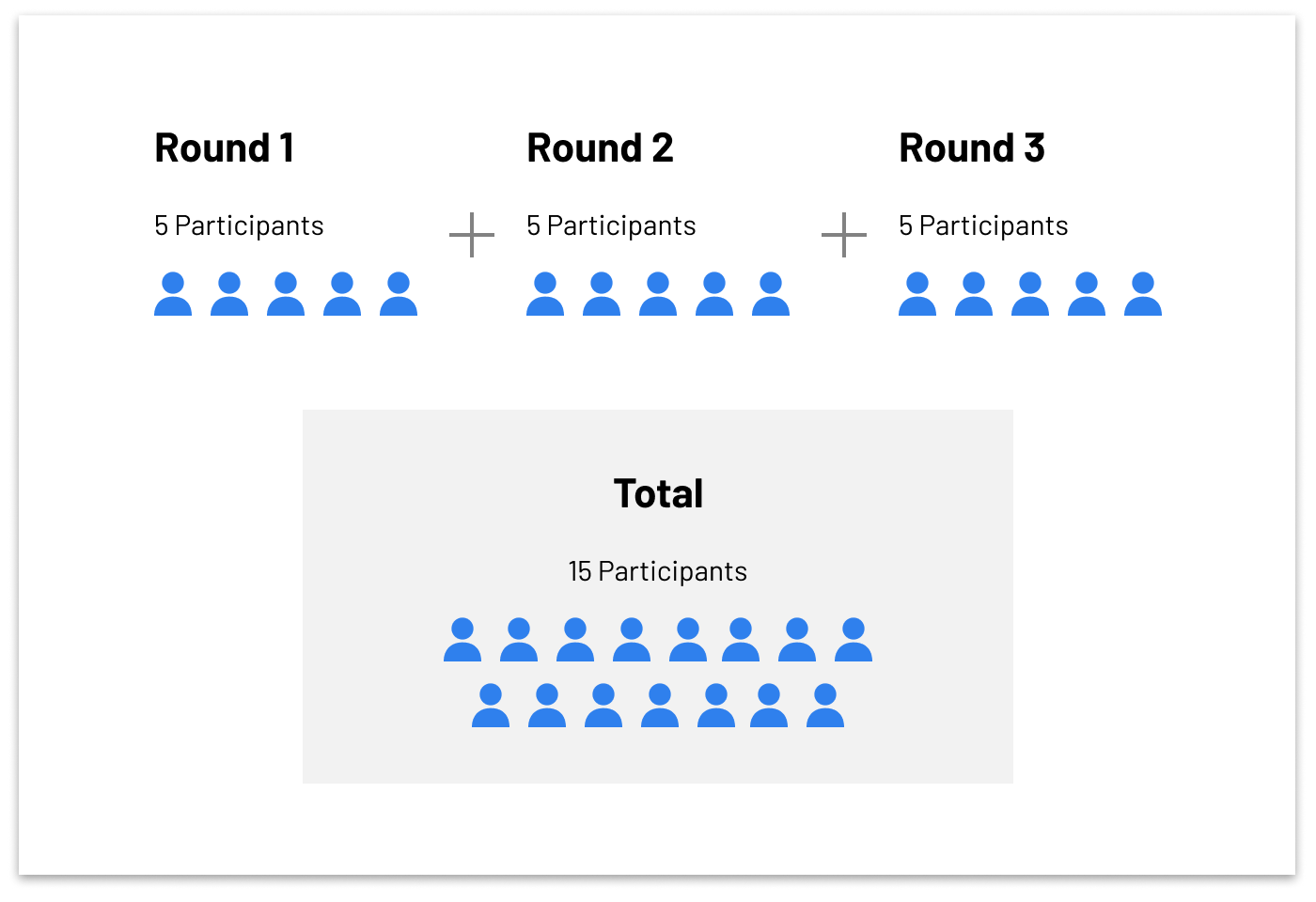

However, usability testing should never be thought of as a one-and-done task that can be ticked off the product development to-do list. UX testing should be incorporated in every phase of the development process. By the time you take your product to market, you will likely have received feedback from 25-30 test users.

When testing with five users each round, it’s important to continue iterating and testing. Over time, you’ll have validated your product with a more substantial pool of participants.

In addition, with each round of lean, five-person testing, you may find that further testing is needed before you can consider your current round of testing complete. By setting expectations ahead of time, your team can move forward with an iterative strategy that everyone feels comfortable with — and that best supports your EdTech product.

When it comes to determining how many users to include in your UX research, you must first understand the problems you are trying to address when planning a test. By right-sizing your user pool at the outset, you can bypass unintended conflicts and uncertainty that may arise from meaningless or unclear test results. And that means stronger feedback from each round of testing — and a finished product that meets your users’ needs.