MIT Libraries Framework

More data shouldn't mean less clarity: How we helped MIT Libraries find focus through framework design.

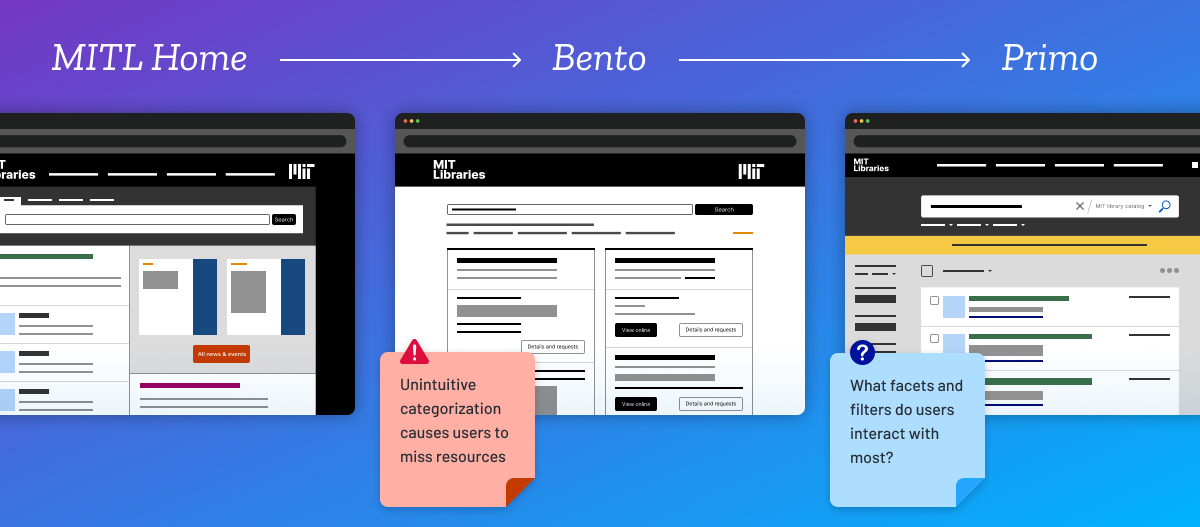

When MIT Libraries found themselves with extensive user research across their many systems — including their library home page, Bento, Primo, and numerous departmental tools — but no clear way to prioritize next steps, they partnered with Openfield to build a diagnostic framework that translated data into decisions. Together, we developed a system that transformed longstanding complaints and vague hunches about these varied library systems into a structured approach for collecting and using data in a way that works better across teams, tools, departments, and priorities.

Even if you think you have the data, driving change in a large organization is hard.

MIT Libraries wasn’t lacking data – they had 40+ user interviews, detailed user insights, and rich systems for collecting user behavioral data. They had a lot of insights but no clear direction.

They needed a way to connect these insights across siloed teams, tools, and collections (e.g., Bento, Primo, DSpace, and Dome) to validate where to focus their limited resources.

Instead of drowning in data, we helped them identify the questions that mattered most. This gave every metric a clear purpose and produced a framework teams could start using immediately while working toward deeper insights over time.

We were impressed with the depth and strategy of this plan — and equally impressed with how Openfield learned to speak the language of libraries.

MIT Libraries Stakeholder

Isolated views across library catalogs, repositories, and discovery tools create a fragmented understanding of what needs the most attention.

Existing interview data couldn't show which issues had the greatest impact, while isolated analytics from individual systems (Primo, DSpace, Bento, etc.) couldn't tell complete stories about where discovery was breaking down or why.

We took signals from all these tools and layered them together into diagnostic clusters. This multi-system view revealed the real stories: whether “can’t find articles” meant a Primo search problem, a DSpace access issue, or confusion moving between systems.

Our framework of diagnostic clusters set the team up for action by enabling them to answer questions about impact and scale: Where is the problem? Why is it happening? For whom? What now?

Our Approach: How to Implement a Framework to Improve University Library Systems

1. Surface the actual questions that need to be answered

We began by listening. We coordinated structured stakeholder sessions to surface open questions and real-world tensions.

2. Understand common user journeys

We worked with key stakeholders to map how users navigate MIT’s various library systems and to pinpoint where they encountered friction. That let us collect data that accurately measured the scope of these issues and guided priorities.

3. Understand technical feasibility for data collection

Our team co-designed instrumentation with MIT Libraries' analytics teams, assessing current capabilities, near-term opportunities, and longer-term goals to create a strategic roadmap within their constraints.

4. Cluster metrics into meaningful stories

Bundling individual metrics into diagnostic clusters revealed where, why, and for whom systems were failing. Single data points had too many exceptions, but clusters provided clear stories. Each metric included implementation guidance and purpose anchors, helping teams adapt the framework with confidence.

5. Introduce segmentation lenses for interpretation

Finally, we layered four interpretive lenses onto the framework (User Role, Intent Type, Entry Channel, and Search Input Style) to break down metrics and reveal not just what's breaking, but for whom, under what conditions, and with what impact.
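To make steps 4 and 5 concrete, here is a minimal sketch of reading a diagnostic cluster through a segmentation lens. It is an illustration under stated assumptions, not the instrumentation built for MIT Libraries: the `Session` record, its field names, and the sample values are all hypothetical. The cluster bundles two metrics (completion rate and average journey length) and reports them together for each value of a chosen lens.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Session:
    """One hypothetical user session. Field names are illustrative only."""
    user_role: str      # lens: User Role
    entry_channel: str  # lens: Entry Channel
    steps: int          # number of steps taken in the journey
    completed: bool     # whether the user reached the item they wanted


def cluster_by_lens(sessions, lens):
    """Group sessions by one lens and report the cluster's metrics side by side."""
    groups = defaultdict(list)
    for session in sessions:
        groups[getattr(session, lens)].append(session)

    report = {}
    for value, group in groups.items():
        report[value] = {
            "sessions": len(group),
            "completion_rate": sum(s.completed for s in group) / len(group),
            "avg_steps": sum(s.steps for s in group) / len(group),
        }
    return report


# Made-up sample data, used only to show the shape of the output.
SAMPLE = [
    Session("undergraduate", "external_search", 9, False),
    Session("undergraduate", "external_search", 8, False),
    Session("undergraduate", "homepage", 4, True),
    Session("faculty", "homepage", 3, True),
    Session("faculty", "external_search", 5, True),
]

if __name__ == "__main__":
    for lens in ("user_role", "entry_channel"):
        print(lens, cluster_by_lens(SAMPLE, lens))
```

Reading the two metrics together is the point of the cluster: a low completion rate paired with a high step count tells a different story than a low completion rate alone, and switching the lens shows which group that story belongs to.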

Phased Implementation Strategy Leveraging Current Tools

Teams responsible for different library tools each managed analytics systems independently, with many limitations on what changes they could make right away.

We co-created three feasibility tiers with these library teams to stage implementation: immediate actions, short-term tracking, and long-term strategic improvements.

The result was a clear roadmap that enabled teams to act immediately within current systems while building toward long-term data collection improvements.

The Power of Coordinated Diagnostic Frameworks

The Problem: A Common Critical Pain Point is Revealed

"Users say they can't find articles even when they have the citation." It’s a familiar refrain. But without shared definitions or diagnostic tools, the cause is murky. Each team sees a piece of the problem, but no one sees the whole.

The Typical Response: Well-Intentioned Hunches

Different teams take action within their own domains — adjusting search fields, revising help text, tweaking backend structures. But without coordination, these fixes might not actually fix anything. The result? Continued user frustration, wasted time, and stalled progress.

How Our Framework Can Help Address User Frustrations for University Library Systems

Turn vague problems into specific, actionable insights. Here’s how:

Where’s the friction?

Looking at the relevant diagnostic cluster, we see that successful completion rates are low and the user journey involves an unusually high number of steps. That suggests a navigational or access-layer breakdown — not a data quality issue.

Why is it happening?

We dig deeper into system-level signals and find significant drop-off at the authentication or permissions step, especially when users are off-network or on mobile devices.

For whom?

The issue is concentrated among less experienced users accessing the system through indirect channels, like external search engines or deep links, rather than through the main homepage.

What now?

With a clear diagnosis, teams move from guesswork to targeted action.
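The "why" step in the walkthrough above amounts to a funnel comparison, which can be sketched in a few lines. This is a hedged illustration only: the step names, the two segments, and every count below are invented for the example and do not come from MIT Libraries data. The sketch computes the share of sessions lost between consecutive journey steps, then finds the step with the largest loss for each segment.

```python
# Hypothetical journey steps, in order. Names are assumptions for this sketch.
FUNNEL_STEPS = ["search", "result_click", "authentication", "full_text"]


def drop_off(counts):
    """Return the share of sessions lost between each consecutive pair of steps."""
    rates = {}
    for prev, nxt in zip(FUNNEL_STEPS, FUNNEL_STEPS[1:]):
        reached = counts[prev]
        # Guard against division by zero when no session reached the earlier step.
        rates[f"{prev}->{nxt}"] = 0.0 if reached == 0 else 1 - counts[nxt] / reached
    return rates


def worst_step(counts):
    """Name the transition where the largest share of sessions is lost."""
    rates = drop_off(counts)
    return max(rates, key=rates.get)


# Made-up session counts per step for two segments.
ON_NETWORK = {"search": 100, "result_click": 80, "authentication": 76, "full_text": 72}
OFF_NETWORK = {"search": 100, "result_click": 80, "authentication": 70, "full_text": 28}

if __name__ == "__main__":
    for name, counts in (("on-network", ON_NETWORK), ("off-network", OFF_NETWORK)):
        print(name, worst_step(counts), drop_off(counts))
```

With counts shaped like these, the off-network segment loses most of its sessions between authentication and full text, while the on-network segment does not. That contrast is what separates an access problem from a search problem.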

Strategic Communication and Implementation Guidance

Teams needed alignment around 50+ complex data stories while instrumentation details remained in flux, requiring clear guidance that could adapt as technical implementation evolved.

We created a walk-through deck and handbook with purpose anchors connecting each metric to strategic questions, helping teams understand the 'why' behind measurements.

Teams remained aligned on what mattered most and could adjust to technical changes while maintaining strategic focus.

Summary of Best Practice Approach to Understanding and Acting Upon Data in University Library Systems

The Challenge

Creating buy-in across complex organizational hierarchies while maintaining technical rigor.

Our Approach

We positioned stakeholders as the experts and ourselves as facilitators, using iterative workshops to extract and organize their knowledge. This approach ensured the framework reflected real-world constraints while building genuine organizational ownership.

The Impact

Rather than imposing external solutions, we helped MIT Libraries systematize their existing expertise into an actionable measurement strategy.

About MIT Libraries

MIT Libraries serves the research and educational needs of the Massachusetts Institute of Technology community through multiple specialized systems and collections. The library system includes traditional academic resources, specialized collections for music and media, physical artifacts, and digital research tools, all integrated into a complex discovery ecosystem serving diverse user types from undergraduates to faculty researchers.

Transform User Problems into User Delight

Most EdTech teams know something isn't working — but knowing what to fix, how to fix it, and in what order requires specialized expertise.

Pinpoint Real User Problems

Our Research & Testing services can help you pinpoint what's actually broken and why.

Make Confident Product Decisions

Our Ideation & Planning services help you prioritize roadmap updates with business justification.

Design and Validate Before You Build

Our Design & Prototyping services help you reduce engineering risks and costly reworks.
